Introduction

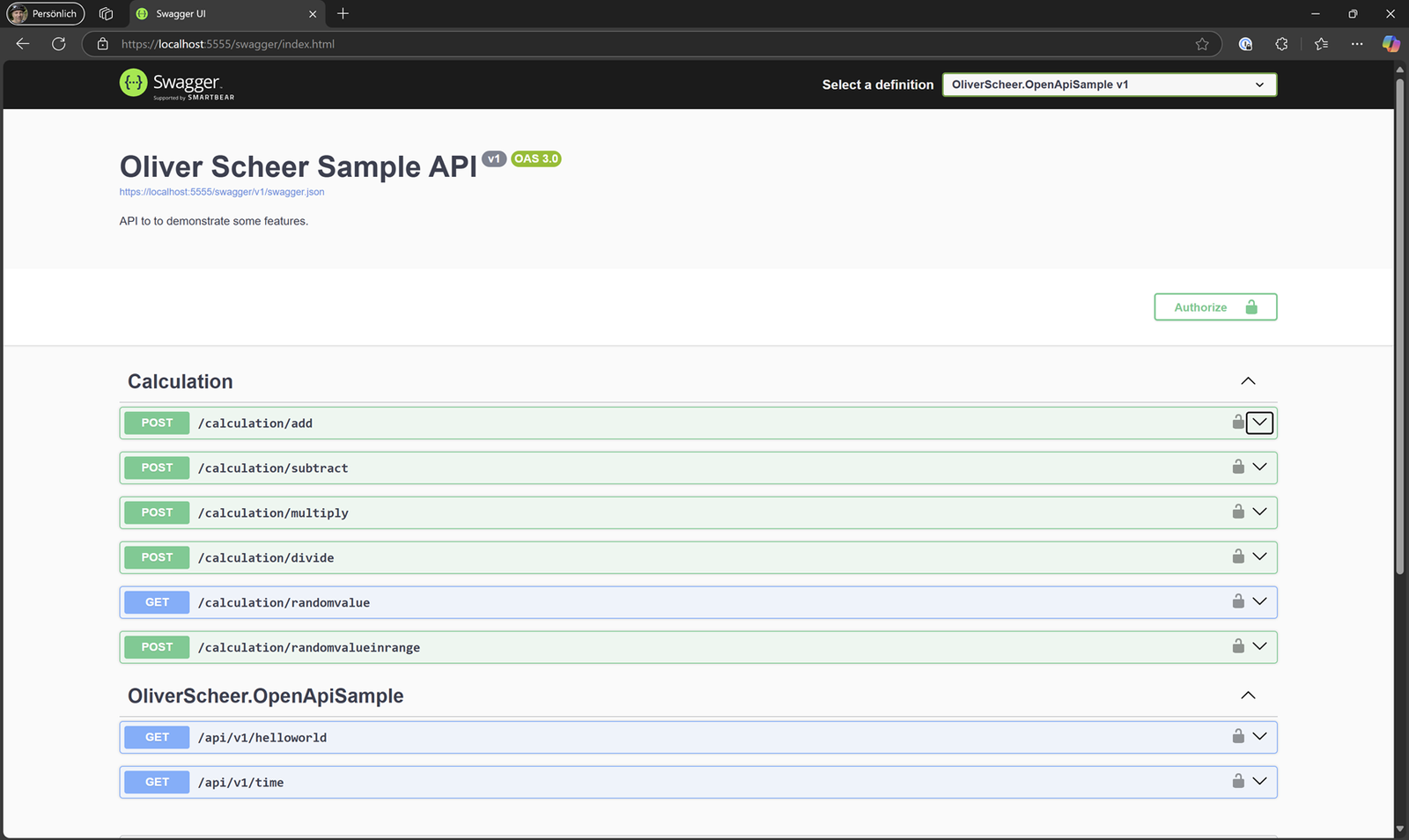

In modern .NET projects, API documentation is a must. While Swagger has become the default choice, Scalar offers a clean, minimal alternative with powerful customization options. In this tutorial, we’ll walk through how to integrate Scalar into a containerized .NET 8 API that runs as part of an Aspire.NET app.

This article is tailored for experienced developers looking to modernize their API tooling in a cloud-native setup. You’ll learn:

- How to replace Swagger with Scalar in your ASP.NET Core app

- How to expose Scalar in a containerized setup using Aspire.NET

- How to fine-tune Scalar’s behavior using MapScalarApiReference

Problem & Context

Swagger is ubiquitous, but not always lightweight. Scalar steps in as a faster, more minimal alternative. In a recent microservices project based on Aspire.NET, we wanted to:

- Provide clean OpenAPI documentation

- Run the documentation UI within the API container

- Keep the developer experience seamless via Aspire Dashboard

Scalar supports all of this out-of-the-box – but setting it up in an Aspire app (with containerization) needs a few deliberate steps.

Solution: Integrating Scalar in a Containerized API

Step 1: Install Scalar

First, add the Scalar NuGet package to your ASP.NET Core API project:

dotnet add package Scalar.AspNetCore

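Also make sure OpenAPI document generation is registered in Program.cs (the AddOpenApi extension comes from the Microsoft.AspNetCore.OpenApi package, version 9.0 or later):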

builder.Services.AddOpenApi();

This enables the OpenAPI spec that Scalar will use under the hood.

Step 2: Configure Scalar Middleware

In your Program.cs, hook up Scalar using MapScalarApiReference inside the if (app.Environment.IsDevelopment()) block:

    // Serves the generated OpenAPI document that Scalar reads.
    app.MapOpenApi();

    app.MapScalarApiReference(options =>
    {
        // Collect the server URLs that Scalar offers in its server selector.
        List<ScalarServer> servers = [];

        string? httpsPort = Environment.GetEnvironmentVariable("ASPNETCORE_HTTPS_PORT");
        if (httpsPort is not null)
        {
            servers.Add(new ScalarServer($"https://localhost:{httpsPort}"));
        }

        string? httpPort = Environment.GetEnvironmentVariable("ASPNETCORE_HTTP_PORT");
        if (httpPort is not null)
        {
            servers.Add(new ScalarServer($"http://localhost:{httpPort}"));
        }

        options.Servers = servers;
        options.Title = "Brickcity Story Management API";
        options.ShowSidebar = true;
    });

With this setup, Scalar's server list is built from the ports found in the environment variables instead of hard-coded values, so it also works when the API runs in a container.
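The port lookup above can be factored into a small helper. The following is a minimal sketch, not part of Scalar or ASP.NET Core: the class ScalarServerUrls and its methods FromPorts and FirstSet are hypothetical names introduced here for illustration. It assumes that a port variable may hold either a single port or a semicolon-separated list, and that the variable name may differ between environments.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (not a Scalar or ASP.NET Core API).
public static class ScalarServerUrls
{
    // Turns a port value such as "8080" or "8080;8082" into URLs
    // like "http://localhost:8080". Invalid entries are skipped.
    public static List<string> FromPorts(string scheme, string? ports, string host = "localhost")
    {
        var urls = new List<string>();
        if (string.IsNullOrWhiteSpace(ports))
        {
            return urls; // variable not set: contribute no servers
        }

        var options = StringSplitOptions.RemoveEmptyEntries | StringSplitOptions.TrimEntries;
        foreach (string part in ports.Split(';', options))
        {
            // Keep only values that are valid TCP port numbers.
            if (int.TryParse(part, out int port) && port is > 0 and <= 65535)
            {
                urls.Add($"{scheme}://{host}:{port}");
            }
        }

        return urls;
    }

    // Returns the value of the first environment variable that is set,
    // so several candidate names can be tried in order.
    public static string? FirstSet(params string[] names)
    {
        foreach (string name in names)
        {
            string? value = Environment.GetEnvironmentVariable(name);
            if (!string.IsNullOrWhiteSpace(value))
            {
                return value;
            }
        }

        return null;
    }
}
```

Inside MapScalarApiReference, each URL returned by FromPorts("https", FirstSet("ASPNETCORE_HTTPS_PORTS", "ASPNETCORE_HTTPS_PORT")) could then be wrapped in a new ScalarServer and added to the servers list. Trying several names in order is a design choice that keeps the same code working whether the environment sets the singular or the plural variable.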

Step 3: Configure Launch Settings

Make sure your launchSettings.json exposes the right ports and opens the Scalar UI:

    "launchUrl": "scalar",
    "applicationUrl": "https://localhost:7154;http://localhost:5185",

In your Docker profile:

    "environmentVariables": {
        "ASPNETCORE_HTTPS_PORTS": "8081",
        "ASPNETCORE_HTTP_PORTS": "8080"
    },
    "launchUrl": "{Scheme}://{ServiceHost}:{ServicePort}/scalar",
    "publishAllPorts": true

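The middleware from Step 2 builds the OpenAPI server URLs from environment variables like these. Note that the Docker profile sets the plural ASPNETCORE_HTTPS_PORTS and ASPNETCORE_HTTP_PORTS, while the code in Step 2 reads the singular ASPNETCORE_HTTPS_PORT and ASPNETCORE_HTTP_PORT, so make sure the names you read match the ones your environment actually sets.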
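For orientation, here is a sketch of how the two fragments might sit inside a complete launchSettings.json. The profile names ("https" and "Container (Dockerfile)"), the commandName values, and the launchBrowser, useSSL and ASPNETCORE_ENVIRONMENT entries are assumptions based on the usual Visual Studio templates and are not taken from the original project; only the launchUrl, applicationUrl, port variables and publishAllPorts values come from the snippets above.

```json
{
  "profiles": {
    "https": {
      "commandName": "Project",
      "launchBrowser": true,
      "launchUrl": "scalar",
      "applicationUrl": "https://localhost:7154;http://localhost:5185",
      "environmentVariables": {
        "ASPNETCORE_ENVIRONMENT": "Development"
      }
    },
    "Container (Dockerfile)": {
      "commandName": "Docker",
      "launchBrowser": true,
      "launchUrl": "{Scheme}://{ServiceHost}:{ServicePort}/scalar",
      "environmentVariables": {
        "ASPNETCORE_HTTPS_PORTS": "8081",
        "ASPNETCORE_HTTP_PORTS": "8080"
      },
      "publishAllPorts": true,
      "useSSL": true
    }
  }
}
```

Your generated file may use different profile names, so treat this as a map of where the settings go rather than something to paste in unchanged.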

Step 4: Connect via Aspire

In your AppHost project, reference the API project like this:

    IResourceBuilder<ProjectResource> apiStory = builder
        .AddProject<Projects.BrickCity_Api_StoryManagement>("api-story-management");

Aspire takes care of service discovery, dashboard integration, and default port mapping. You should now be able to access the Scalar UI directly via the Aspire dashboard or by browsing to:

http://localhost:<assigned-port>/scalar
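To show where that registration lives, here is a minimal sketch of an AppHost Program.cs. It assumes the default Aspire AppHost template, with the AppHost project referencing the API project so that the Projects.BrickCity_Api_StoryManagement type is generated; the surrounding lines are the standard template calls and not code from the original project.

```csharp
// AppHost/Program.cs (sketch, assuming the default Aspire AppHost template)
var builder = DistributedApplication.CreateBuilder(args);

// Register the API project as a resource. The Projects.* type is generated
// from the project reference in the AppHost's .csproj file.
IResourceBuilder<ProjectResource> apiStory = builder
    .AddProject<Projects.BrickCity_Api_StoryManagement>("api-story-management");

// Start the orchestrator and the Aspire dashboard.
builder.Build().Run();
```

The apiStory variable is kept so that other resources can reference the API later; if nothing else depends on it, the return value can simply be discarded.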

Use Case: Brickcity Story Management API

In our case, we applied this setup to the Brickcity Story Management API. The API runs as a containerized service managed by Aspire, and developers get instant access to the documentation UI locally via /scalar. Thanks to the flexible port handling, the experience is seamless even in multi-service environments.

In our experience, Scalar loads faster than Swagger UI and keeps the focus on the essentials, which makes it a good fit for internal APIs and rapid iterations.

Conclusion & Takeaways

Scalar is a great replacement for Swagger if you value speed and simplicity. Combined with Aspire.NET, it fits naturally into modern, containerized .NET environments.

Key Learnings:

- Use MapScalarApiReference() to fine-tune behavior

- Pass HTTP/HTTPS ports via env vars for container compatibility

- Let Aspire handle service wiring and discovery

- Keep your dev loop fast with launchUrl: "scalar"

What’s next?

Have you already tried Scalar in production? Curious how it behaves with versioned APIs? Let’s discuss!
